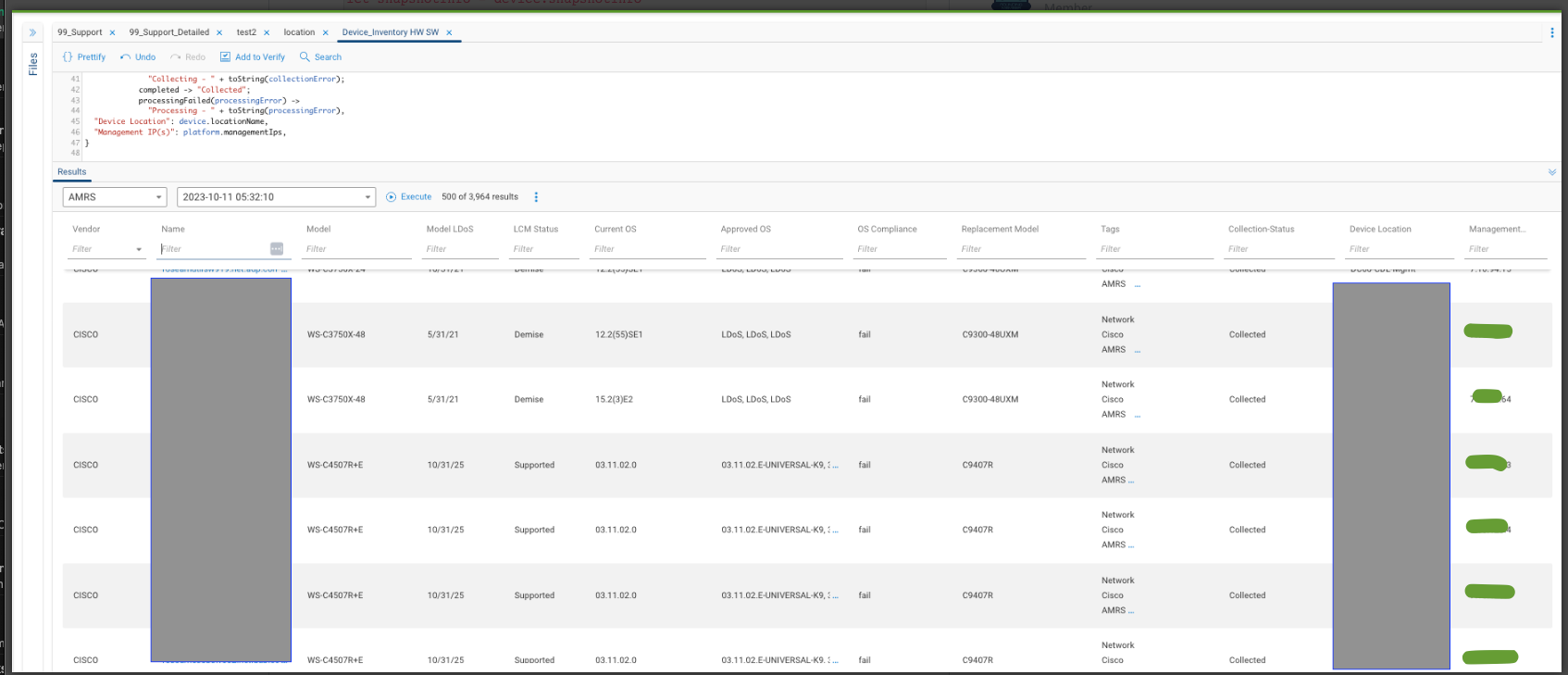

I have put together a query to enhance inventory by adding LDoS dates and ‘approved software’. It has greatly improved our Life Cycle Mgmt. conversations. The CSV Files reside separately in a ‘standards’ folder. (there are just too many fields to keep in the same script).

The resulting output provides:

Vendor | Hostname | Model | Location | Vendor LDoS Date | HW Compliance Date | Current OS | Approved OS | Compliance to OS | Replacement Mode | And more

/**

* @intent Get OS Versions of all models and compare to ADP and Vendor Standards

*/

// Get TagName Function - If you put tagnames on objects in forward Networks and you want to pull them out into columns.

// You can define the tags in the export functions below and pull them out as a column. Below you can see the

// Tags in "xxx" that we want to pull - ex ["DC", "CoLo", "Branch", "Cloud", "AWS"]);

// Environment: get_env_from_tags(device.tagNames),

// Region: get_region_from_tags(device.tagNames),

// Function: get_function_from_tags(device.tagNames),

export get_list_match_from_tags(tags: List<String>, expectedValues: List<String>) =

max(foreach v in expectedValues

where v in tags

select v);

export get_env_from_tags(tags: List<String>) =

get_list_match_from_tags(tags, ["DC", "CoLo", "Branch", "Cloud", "AWS"]);

export get_region_from_tags(tags: List<String>) =

get_list_match_from_tags(tags, ["EMEA", "AMRS", "APAC", "LATM"]);

export get_function_from_tags(tags: List<String>) =

get_list_match_from_tags(tags, ["Firewall",

"LB",

"Proxy",

"WLC",

"Router",

"Switch",

"Controller"

]);

//

/**

* @intent Replace OS versions with more appropriate OS version for queries

* @description The goal of this NQE util is to easily allow users to create queries that show

* more appropriate sofware OS numbers, for example removing build numbers

*/

// Get Version Function

pattern_tmos =

```

Sys::Version

Main Package

Product BIG-IP

Version {versionNumber:string}

```;

rSeriesModelRegex = re`^r\d+`;

rSeriesSoftwareRegex = re`(^[^\-]+)`;

removeRSeriesbuild(version) =

foreach match in regexMatches(version, rSeriesSoftwareRegex)

select match.string;

getTMOSVersion(d: Device) =

foreach command in d.outputs.commands

where command.commandType == CommandType.VERSION

let parsed_config = parseConfigBlocks(OS.F5, command.response)

let customVersionNumber = max(foreach match

in blockMatches(parsed_config, pattern_tmos)

select match.data.versionNumber)

select customVersionNumber;

getF5Version(device) =

if hasMatch(device.platform.model, rSeriesModelRegex)

then removeRSeriesbuild(device.platform.osVersion)

else getTMOSVersion(device);

export getVersion(d: Device) =

foreach device in network.devices

where device.name == d.name

select if d.platform.vendor == Vendor.F5

then max(getF5Version(device))

else device.platform.osVersion;

// This is the work around for Nexus to distinguish against ACI and Non-ACI devices`

// cisco Nexus9500 C9508 (8 Slot) Chassis ("Supervisor Module")

NexusPattern = ```

Hardware

cisco Nexus9000 {modelName:string}

```;

getModel(device) =

foreach x in [0]

foreach command in device.outputs.commands

where command.commandType == CommandType.VERSION

let blocks = parseConfigBlocks(OS.NXOS, command.response)

let nexusModel = max(foreach match in blockMatches(blocks, NexusPattern)

select match.data.modelName)

let model = if isPresent(nexusModel)

then join(" ", ["Nexus9000", nexusModel])

else device.platform.model

select model;

//

export Software =

"""csv

VENDOR,ENV,OWNER,MODEL,N+1,N,N-1,N-2,DATE

ARISTA,DC,AristaOwner,DCS-7010T-48,None,4.28.8.1M,4.28.3M,None,1/1/2024

ARISTA,DC,AristaOwner,DCS-7050SX-128-F,None,4.28.8.1M,4.28.3M,None,1/1/2024

ARISTA,DC,NexusOwner,DCS-7050SX-128-R,None,4.28.8.1M,4.28.3M,None,1/1/2024

ARISTA,DC,NexusOwner,DCS-7050SX-64-F,None,4.28.8.1M,4.28.3M,None,1/1/2024

CISCO,CoLo,BranchOwner,CISCO2851,LDOS,12.4(24)T2,LDOS,LDOS,None

Cisco,Branch,BranchOwner,C9130AXI-A,None,17.9.6,None,None,6/25/2025

Cisco,Branch,BranchOwner,C9136I-Z,None,17.9.6,None,None,6/25/2025

CISCO,Branch,BranchOwner,ASR1001-HX,None,17.09.04a,17.09.03a,17.06.04,1/1/2024

CISCO,Branch,BranchOwner,C8300-2N2S-4T2X,None,17.09.05f,17.09.05e,17.09.04a,2/1/2025

CISCO,Branch,BranchOwner,C9300L-48T-4X,None,17.09.06a,17.09.05,17.09.04a,1/1/2024

CISCO,Branch,BranchOwner,C9407R,None,17.09.06a,17.09.05,17.09.04a,1/1/2024

CISCO,DC,FirewallOwner,FPR4K-SM-48S,None,9.18(3)55,9.12(4)10,9.12(3)2,1/1/2024

CISCO,DC,FirewallOwner,WS-SVC-FWM-1,LDOS,3.2(18),LDOS,LDOS,1/1/2024

F5,DC,LB_Owners,BIG-IP i10800,None,17.1.2.1,17.1.1.3,15.1.10.3,1/1/2024

F5,DC,LB_Owners,BIG-IP Tenant,None,17.1.2.1,17.1.1.3,15.1.10.3,1/1/2024

PALO,DC,FirewallOwner,PA-VM,None,11.1.6-h7, 11.1.6-h3,11.1.5-h1,7/8/2025

PALO,DC,FirewallOwner,PA-5450,None,11.1.6-h3,11.1.2-h3,10.1.8-h7,3/17/2025

""";

//

// Original Query

main =

foreach device in network.devices

where device.name == device.system.physicalName ||

device.platform.vendor == Vendor.PALO_ALTO_NETWORKS &&

matches(device.name, "*_vsys1")

// where device.name == device.system.physicalName

where isPresent(device.platform.osVersion)

let os = getVersion(device)

foreach entry in Software

where device.platform.model == entry.MODEL

let OSversion = if device.platform.vendor != Vendor.F5

then toString(device.platform.osVersion)

else max(getVersion(device))

let ModelFix = if device.platform.os == OS.NXOS && !("ACI" in device.tagNames)

then max(getModel(device))

else device.platform.model

foreach entry in Software

where ModelFix == entry.MODEL

// let Software= join(", ", [entry.N_11, entry.N_2])

let approved_sw = join(", ", [entry.N, entry.N_11, entry.N_2])

select {

Vendor: entry.VENDOR,

Environment: get_env_from_tags(device.tagNames),

Region: get_region_from_tags(device.tagNames),

Function: get_function_from_tags(device.tagNames),

Type: device.platform.deviceType,

Name: device.name,

model: ModelFix,

"Current-OS": OSversion,

"Approved OS": approved_sw,

"ADP N Compliance": if OSversion in [entry.N] then "Pass" else "Fail",

"ADP N/N-1/N-2 Compliance": if OSversion in [entry.N, entry.N_11, entry.N_2]

then "Pass"

else "Fail",

// "Prior Versions (n-1 n-2)": Software,

Location: device.locationName,

Tags: device.tagNames,

"Management IP(s)": device.platform.managementIps

};

diffs =

foreach x in [1]

let names = (foreach x in main()

select x.Name)

foreach device in network.devices

where device.name == device.system.physicalName ||

device.platform.vendor == Vendor.PALO_ALTO_NETWORKS &&

matches(device.name, "*_vsys1")

where device.name not in names

select {

name: device.name,

vendor: device.platform.vendor,

version: device.platform.osVersion,

platform: device.platform,

device

};

export analytics_sw_compliance = main();

diffs()

// main()